You use Decision Validation Services to test and simulate rulesets in Rule Designer and Decision Center.

Enable the ability to run tests and simulations from the Decision Center Enterprise console and remote testing from Rule Designer.

Customize testing and simulation features for your specific needs.

Testing and simulation in Decision Validation Services give users the ability to validate usage scenarios to ensure correctness of rulesets, or to see the potential impact of changes to rules.

Central to both testing and simulation, scenarios represent real or fictitious input data to rule execution, and are stored in Excel or another format when appropriate. During testing or simulation, you submit scenarios to a Scenario Service Provider (SSP), either locally in Rule Designer or configured within Rule Execution Server for remote testing. In either case, the SSP returns a report of the results of rule execution on the scenarios.
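The submit-and-report cycle described above can be pictured with a small, self-contained Java sketch. It is only an illustration under stated assumptions: the `Scenario` class, the `approve` stand-in ruleset, and the `run` method are hypothetical names and are not part of the Decision Validation Services API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.IntPredicate;

// Hypothetical sketch: none of these types belong to the Decision Validation Services API.
final class ScenarioReportSketch {

    /** One scenario: a name, the input data, and the decision the tester expects. */
    static final class Scenario {
        final String name;
        final int loanAmount;
        final boolean expectedApproval;

        Scenario(String name, int loanAmount, boolean expectedApproval) {
            this.name = name;
            this.loanAmount = loanAmount;
            this.expectedApproval = expectedApproval;
        }
    }

    /** Stand-in for ruleset execution: approves loans of at most 100,000. */
    static boolean approve(int loanAmount) {
        return loanAmount <= 100_000;
    }

    /** Executes the ruleset on each scenario and returns one report line per scenario. */
    static List<String> run(List<Scenario> scenarios, IntPredicate ruleset) {
        List<String> report = new ArrayList<>();
        for (Scenario s : scenarios) {
            boolean actual = ruleset.test(s.loanAmount);
            report.add(s.name + ": " + (actual == s.expectedApproval ? "PASS" : "FAIL"));
        }
        return report;
    }

    public static void main(String[] args) {
        List<Scenario> scenarios = Arrays.asList(
                new Scenario("small loan", 20_000, true),
                new Scenario("large loan", 500_000, false));
        for (String line : run(scenarios, ScenarioReportSketch::approve)) {
            System.out.println(line);
        }
    }
}
```

In the product, the SSP plays the role of `run`, and the report carries far more detail than a pass or fail flag.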

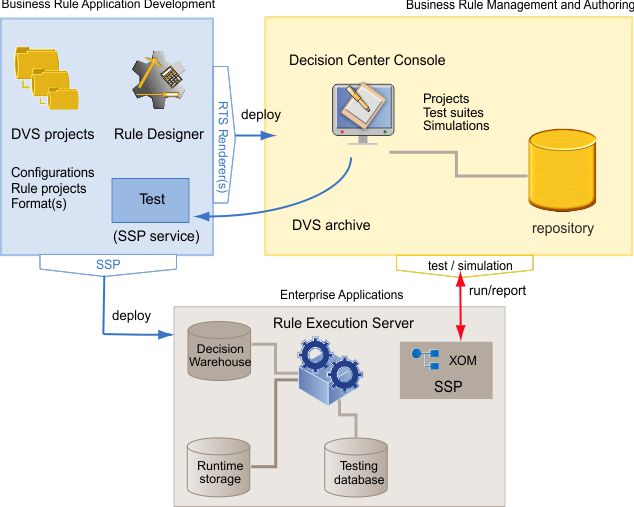

Getting familiar with testing and simulation: Explains how scenarios are used with test suites and simulation artifacts in the Enterprise console, and with launch configurations in Rule Designer.

Testing rules in Rule Designer: Describes how to use Rule Designer to test and debug the execution of rulesets on your scenarios.

Enabling remote testing: Describes what you must do to enable remote testing in both Rule Designer and Decision Center.

You can also extend the formats to add KPIs that interpret the results of simulations, and to change how scenarios are provided to the SSP. Customization activities revolve around the DVS project, a container in Rule Designer that holds individual customizations and is used to repackage the Decision Center and SSP .ear or .war files.

Testing how rulesets execute

It is a good practice to test rules before giving the "go ahead" to deploy them to a production environment. Decision Validation Services is designed for developers, QA teams, and business users to create testing and simulation solutions based on a number of use cases and scenarios. These solutions are used to validate correctness and effectiveness of rulesets. The ability to test scenarios gives business users confidence to make rule changes because they can be assured that the changes they make have the required results.

There are additional considerations when business users are responsible for authoring and testing rules. The default Excel scenario template often requires customization so that test scenarios are easier to populate. For example, in rule projects where the ruleset parameters contain complex objects with collections or nested collections, the scenario file template might not be a usable format for entering test scenario input data. In other cases, the generated column names for the input data in the scenario file template are non-descriptive. This happens when the ruleset input parameters (used to generate the column names) include BOM classes with the default constructor arguments.

Rule Designer: to enable testing and simulation, and to test and debug rulesets.

Decision Center: to run test suites and simulations from the Enterprise console, and to validate the rulesets.

Rule Execution Server: to audit execution traces stored in Decision Warehouse.

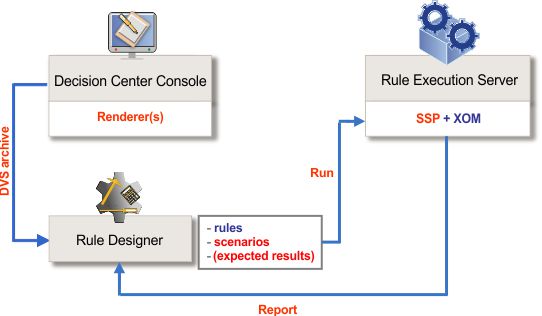

Rule Designer is the module developers use to test business rules against test scenarios. The following figure shows how Decision Validation Services work in Rule Designer by connecting to a server that has Rule Execution Server and a Scenario Service Provider (SSP) configured:

A developer is likely to use Rule Designer for testing rulesets when the results of running a test suite in Decision Center are not as expected and the reasons for the difference are not obvious. Rule Designer is much better suited than Decision Center to debugging test suites. With debugging support, developers can step into the rules and inspect the computed data to resolve the unexpected error or problem. Business users can provide developers with a DVS archive so that the developers can run the tests inside Eclipse. With Eclipse, the developers can run the test suites in a more controlled environment and analyze the execution and the results in greater detail.

define a rule project with the correct ruleset parameters (data for the scenarios)

validate the business object model (BOM) and generate an Excel template to ensure that the column names are displayed as expected

set up a test server or use the SSP for a fully local (Java™ SE) implementation

Developers can create test scenario spreadsheets and run test suites locally or remotely with the testing server (Scenario Service Provider), which calls Rule Execution Server to execute the test scenarios. In Rule Designer, developers can also integrate ruleset testing with JUnit. After the JUnit classes have been implemented, a continuous integration tool, such as CruiseControl, can report regressions on nightly builds so that developers or testing engineers catch errors and detect problems quickly.

Developers can carry out various customizations to improve the testing experience for business users.

Developers can customize the default Excel scenario file template to minimize the amount of data required for running a test scenario. The data that is not critical from the business perspective can be generated or populated with code so that business users only provide the data of interest to define the test scenario. When customizing the default scenario template, developers can easily test the template generation from within Rule Designer without deploying the customized format. If the scenario data resides in XML files or in an external database, the developer can use the API of Decision Validation Services to populate the Excel file with this external data. Business users can then focus on defining the test and only modifying data values that are relevant to the test.
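One way to picture "populated with code" is a helper that fills in the non-critical fields and lets the values entered by the business user take precedence. The following Java sketch is an assumption-laden illustration: the class name, the `complete` method, and the field names (`currency`, `branchCode`, `applicationDate`) are hypothetical and do not come from the Decision Validation Services API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: the field names and this helper are illustrative only.
final class ScenarioDefaultsSketch {

    /**
     * Returns a complete scenario row: generated defaults for fields that are
     * not critical to the business, overridden by whatever the user entered.
     */
    static Map<String, Object> complete(Map<String, Object> userRow) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("currency", "USD");
        row.put("branchCode", "HQ");
        row.put("applicationDate", "2020-01-01");
        row.putAll(userRow); // user-supplied values replace the defaults
        return row;
    }

    public static void main(String[] args) {
        Map<String, Object> userRow = new LinkedHashMap<>();
        userRow.put("loanAmount", 25_000);
        System.out.println(complete(userRow));
    }
}
```

With this approach, the business user enters only `loanAmount` and the remaining columns never need to appear in the scenario file.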

presenting the required scenario data differently

providing custom tests

changing the report format to highlight important results

For example, if a particular scenario format is necessary to test rules, developers create a custom scenario provider. In Rule Designer, it is possible to define how scenario data (input) is requested. The source could be a different Excel format or a non-Excel data source, such as a database or XML files. As a result, it is also necessary to customize how test execution is processed.
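As a rough sketch of what a scenario provider over a non-Excel source might look like, the following Java example reads scenarios from comma-separated text. The `ScenarioSource` interface and its two methods are assumptions made for illustration; the provider interface in the product has its own name and signatures, which are not reproduced here. The parsing is deliberately naive and does not handle quoted cells.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: ScenarioSource is NOT the product's scenario provider interface.
final class CsvScenarioSourceSketch {

    /** Minimal contract assumed here: how many scenarios exist, and how to fetch one. */
    interface ScenarioSource {
        int scenarioCount();
        Map<String, String> scenarioAt(int index);
    }

    /** Reads scenarios from CSV lines; the first line names the columns. */
    static final class CsvScenarioSource implements ScenarioSource {
        private final String[] header;
        private final List<String[]> rows = new ArrayList<>();

        CsvScenarioSource(List<String> lines) {
            if (lines.isEmpty()) {
                throw new IllegalArgumentException("A header line is required");
            }
            header = lines.get(0).split(",", -1);
            for (String line : lines.subList(1, lines.size())) {
                String[] cells = line.split(",", -1); // naive split, no quoting support
                if (cells.length != header.length) {
                    throw new IllegalArgumentException("Malformed row: " + line);
                }
                rows.add(cells);
            }
        }

        @Override
        public int scenarioCount() {
            return rows.size();
        }

        @Override
        public Map<String, String> scenarioAt(int index) {
            Map<String, String> scenario = new LinkedHashMap<>();
            String[] cells = rows.get(index);
            for (int i = 0; i < header.length; i++) {
                scenario.put(header[i], cells[i]);
            }
            return scenario;
        }
    }

    public static void main(String[] args) {
        List<String> lines = new ArrayList<>();
        lines.add("name,loanAmount");
        lines.add("small loan,20000");
        lines.add("large loan,500000");
        ScenarioSource source = new CsvScenarioSource(lines);
        for (int i = 0; i < source.scenarioCount(); i++) {
            System.out.println(source.scenarioAt(i));
        }
    }
}
```

A database-backed or XML-backed source would implement the same two operations, which is why the rest of the test execution can stay unchanged when only the source changes.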

configuration of the Scenario Service Provider servers

configuration of the rule projects to retrieve the XOMs

customization of the formats used to enter scenarios for testing

A configuration task, which requires developers to add references to rule projects, so that the XOM used can be packaged into SSP, and to select the target deployment application server and Rule Execution Server credentials for remote testing.

A customization task to declare a custom scenario provider, which includes defining a format.

A format includes the following extension points:

a scenario provider, which declares the format of the scenario data and the tests

a scenario provider renderer

expected results and expected execution details reported

The new scenario provider declares how to access the scenarios used in test suites. Decision Center uses a renderer class to display all the elements defined in the SSP format. The Decision Center Enterprise console must be able to display the new format of the custom scenario service provider. Therefore, developers must repackage and deploy the Decision Center EAR or WAR and make the custom scenario provider and related customizations available to SSP. For example, if the Excel format is not suitable because there are too many fields that are not relevant for testing, developers can design a JavaServer Pages form with the Decision Center renderer class to limit the user inputs.

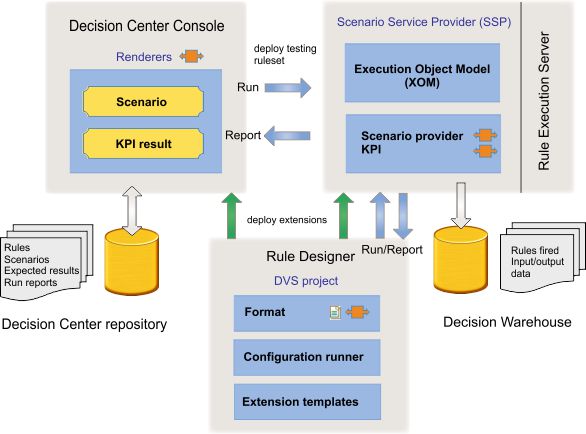

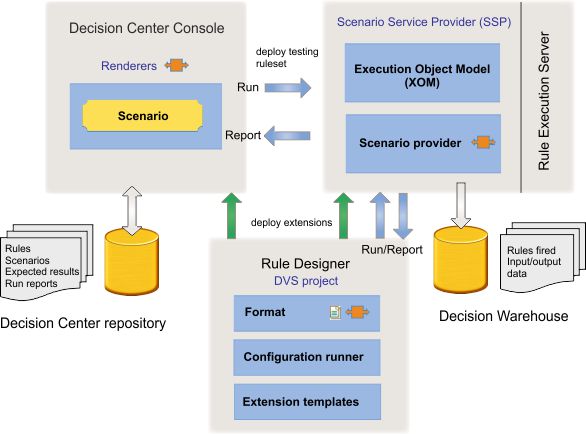

The following figure shows these tasks in the Decision Validation Services project in Rule Designer and the subsequent deployment of the repackaged EAR or WAR files to the application servers where Decision Center and Rule Execution Server are deployed.

Simulating how rulesets execute

Simulation is used to check that business rules yield the expected business decision. Simulation means executing a ruleset against a sufficiently large volume of realistic scenarios, usually historical data, so that the business decision under consideration can be evaluated with reference to other scenarios. Use simulations to compare the results of running an updated set of rules against a typical scenario versus running the existing set of rules against other typical scenarios. For example, if a mortgage company wants to change its loan approval rules to make them stricter, the company can target decisions in which fewer applications are approved, or compare the payment history of the loans approved by the new rules with the payment history of the loans that were approved by the old rules but would be rejected by the new rules.

For a meaningful comparison, simulations measure selected key performance indicators (KPIs) against the results of execution. Each simulation can evaluate multiple KPIs. Incremental computation of KPIs (as each scenario is processed) provides high scalability and performance for typically large simulation runs.
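Incremental computation means that a KPI keeps only a small running state and updates it as each scenario result arrives, rather than storing every result and computing at the end. The following Java sketch shows the idea for an approval-rate KPI. The class and method names are hypothetical and are not the KPI interface of Decision Validation Services.

```java
// Hypothetical sketch: this class is not the product's KPI interface.
final class ApprovalRateKpiSketch {

    /** Approval rate computed from two counters, updated once per scenario. */
    static final class ApprovalRateKpi {
        private long total;
        private long approved;

        /** Called after the ruleset has executed on one scenario. */
        void onScenarioResult(boolean approvedDecision) {
            total++;
            if (approvedDecision) {
                approved++;
            }
        }

        /** Current KPI value; 0.0 when no scenario has been processed yet. */
        double result() {
            return total == 0 ? 0.0 : (double) approved / total;
        }
    }

    public static void main(String[] args) {
        ApprovalRateKpi kpi = new ApprovalRateKpi();
        boolean[] decisions = {true, false, true, true};
        for (boolean decision : decisions) {
            kpi.onScenarioResult(decision);
        }
        System.out.println("Approval rate: " + kpi.result());
    }
}
```

Because the state is two counters, memory use stays constant whether the simulation processes a thousand scenarios or several million.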

Policy managers can analyze aggregate decisions and create reports from the results. They can do this in Excel or other Business Intelligence tools.

Simulation reports provide some answers to the "what if" questions and doubts users have on proposed rule changes. Users can do this "what if" analysis against their own historical data, so that they can gain valuable insight into the potential impact of their changes.

Most realistic simulations require a custom scenario provider. A custom scenario provider is likely to use data directly from production databases that store large data volumes (thousands to potentially millions of scenarios).

connect to historical data in existing databases

allow business users to extract subsets of historical data

directly simulate results to familiar tools

use the same customized SSP and Decision Center for test suites and simulations

execute a simulation in parallel by dividing up the scenarios of a simulation into a number of parts using a list of scenario suites for each part

configuration of the SSP server

configuration of the rule projects to retrieve the XOM

customization of the format or formats used to enter scenarios and KPIs, with the following extension points:

a scenario provider

a scenario provider renderer

a KPI

a KPI result aggregator, in the case of parallel execution

a KPI result renderer

expected results reported
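The role of a KPI result aggregator in parallel execution can be sketched as follows: the scenarios are divided into parts, each part produces a partial KPI result, and the aggregator combines the partial results into one value. This Java example is a sketch under stated assumptions. All names, the loan-amount threshold used as a stand-in ruleset, and the use of a local thread pool are hypothetical and are not how the product distributes or aggregates simulations.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: not the product's parallel execution or aggregation API.
final class ParallelSimulationSketch {

    /** Partial KPI result for one part: counts, not a rate, so parts combine exactly. */
    static final class PartialKpi {
        final long approved;
        final long total;

        PartialKpi(long approved, long total) {
            this.approved = approved;
            this.total = total;
        }
    }

    /** Splits the scenarios into at most {@code parts} contiguous sublists. */
    static List<List<Integer>> partition(List<Integer> scenarios, int parts) {
        if (parts <= 0) {
            throw new IllegalArgumentException("parts must be positive");
        }
        List<List<Integer>> result = new ArrayList<>();
        int size = (scenarios.size() + parts - 1) / parts; // ceiling division
        for (int start = 0; start < scenarios.size(); start += size) {
            result.add(scenarios.subList(start, Math.min(start + size, scenarios.size())));
        }
        return result;
    }

    /** Stand-in for executing the ruleset on one part: approve amounts up to 100,000. */
    static PartialKpi evaluate(List<Integer> part) {
        long approved = 0;
        for (int amount : part) {
            if (amount <= 100_000) {
                approved++;
            }
        }
        return new PartialKpi(approved, part.size());
    }

    /** Aggregator: sums the counters of all parts, then computes the rate once. */
    static double aggregate(List<PartialKpi> partials) {
        long approved = 0;
        long total = 0;
        for (PartialKpi p : partials) {
            approved += p.approved;
            total += p.total;
        }
        return total == 0 ? 0.0 : (double) approved / total;
    }

    /** Runs each part on its own thread and aggregates the partial results. */
    static double simulate(List<Integer> scenarios, int parts) {
        ExecutorService pool = Executors.newFixedThreadPool(parts);
        try {
            List<Future<PartialKpi>> futures = new ArrayList<>();
            for (List<Integer> part : partition(scenarios, parts)) {
                futures.add(pool.submit(() -> evaluate(part)));
            }
            List<PartialKpi> partials = new ArrayList<>();
            for (Future<PartialKpi> future : futures) {
                partials.add(future.get());
            }
            return aggregate(partials);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("Simulation part failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<Integer> amounts = Arrays.asList(50_000, 150_000, 80_000, 200_000, 90_000);
        System.out.println("Approval rate: " + simulate(amounts, 2));
    }
}
```

The partial results carry counts rather than per-part rates because averaging rates from parts of different sizes would give a wrong overall figure.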

The deployment of a custom scenario provider for simulations is very similar to the process for testing, except for the inclusion of domain-specific KPIs to measure the business decisions that are critical to simulations.

The following figure shows these tasks in the DVS project in Rule Designer and the subsequent deployment of the repackaged EAR or WAR files to the application servers where Decision Center and Rule Execution Server are deployed: