Testing and simulation in the Enterprise console

Decision Center provides testing and simulation capabilities that you can run against your rules.

Testing helps you to validate that the rules you create or edit achieve the results you expect. You run a simulation to assess the impact of potential rule changes.

Testing and simulation capabilities are based on usage scenarios, which you create and then upload to Decision Center. You then use these scenarios as part of a test suite or simulation:

- Test Suites

A test suite compares the results that you expect with the actual results obtained when the rules are applied to your scenarios.

- Simulations

Simulations indicate how rule changes impact the business. You usually run a simulation on a large set of scenarios for better key performance indicator (KPI) analysis. You often derive simulations from historical data.

You can run test suites or simulations to provide feedback on the performance of a set of rules without impacting the rules or the applications on which they act.

Each run produces a report. For champion-challenger comparisons, you can display two reports that have the same format side by side.
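As a rough illustration of a champion-challenger comparison, the following Python sketch pairs the KPI values of two reports. It is a hypothetical model, not the Decision Center report format: each report is assumed to be a dictionary that maps a KPI name to a number, and "same format" is assumed to mean "same set of KPI names". The function name `side_by_side` is made up for this example.

```python
from typing import Dict, List, Tuple


def side_by_side(
    champion: Dict[str, float], challenger: Dict[str, float]
) -> List[Tuple[str, float, float, float]]:
    """Pair KPI values from two reports and show the challenger's difference.

    Hypothetical model: a report is a dict of KPI name -> value, and two
    reports have the same format when they define exactly the same KPIs.
    """
    if champion.keys() != challenger.keys():
        raise ValueError("reports do not have the same format")
    # One row per KPI: (name, champion value, challenger value, difference).
    return [
        (kpi, champion[kpi], challenger[kpi], challenger[kpi] - champion[kpi])
        for kpi in sorted(champion)
    ]


rows = side_by_side({"approval_rate_pct": 60.0}, {"approval_rate_pct": 55.0})
```

With these made-up values, `rows` is `[("approval_rate_pct", 60.0, 55.0, -5.0)]`, so the challenger rules would approve five percentage points fewer loans.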

Scenarios

Scenarios represent real or fictitious use cases that you use to validate the behavior of your rules. Each scenario contains all the information required for your rules to execute properly.

For example, if a loan application requires a certain amount of information to process the request, each scenario must provide all of this information:

- Borrower: Sam Adams
- Credit Score: 600
- Yearly Income: 80000
- Duration: 24 months
- Amount: 100000
- Yearly Interest Rate: 5%
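To make the idea of a complete scenario concrete, the following Python sketch models the example above as a single record. It is illustrative only: the `LoanScenario` class, its field names, and the `missing_fields` and `to_scenario` helpers are hypothetical and are not part of Decision Center. The sketch rejects any input that does not supply every value the rules need.

```python
from dataclasses import dataclass, fields
from typing import Any, List, Mapping


@dataclass
class LoanScenario:
    # Hypothetical field names mirroring the example scenario.
    borrower: str
    credit_score: int
    yearly_income: int
    duration_months: int
    amount: int
    yearly_interest_rate_pct: float


# Every field of the record is required for the rules to execute properly.
REQUIRED: List[str] = [f.name for f in fields(LoanScenario)]


def missing_fields(row: Mapping[str, Any]) -> List[str]:
    """Return the required fields that a row of input data does not provide."""
    return [name for name in REQUIRED if row.get(name) is None]


def to_scenario(row: Mapping[str, Any]) -> LoanScenario:
    """Build a scenario, refusing incomplete input data."""
    missing = missing_fields(row)
    if missing:
        raise ValueError(f"scenario is missing: {', '.join(missing)}")
    return LoanScenario(**{name: row[name] for name in REQUIRED})


sam = to_scenario({
    "borrower": "Sam Adams",
    "credit_score": 600,
    "yearly_income": 80000,
    "duration_months": 24,
    "amount": 100000,
    "yearly_interest_rate_pct": 5,
})
```

Because each field maps to one named value, a row of a spreadsheet can be read into one such record, column by column.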

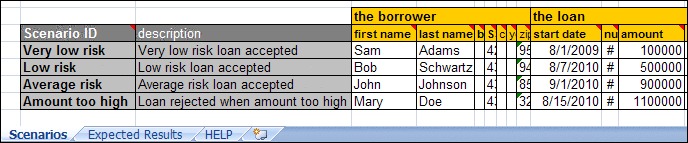

You create scenarios in Microsoft Excel. In the Excel format, each row represents a scenario and each column holds one item of data for that scenario. For example, the following Excel sheet contains four scenarios that validate loan risk, ranging from a very low-risk loan to a loan whose amount is too high:

Test Suites

You run test suites to verify that your rules are correctly designed and written. A test suite compares the expected results, that is, how you expect your rules to behave, with the actual results obtained when the rules are applied to the scenarios that you defined.

You set up the expected results in Excel, in a separate sheet alongside your scenarios.

The example above tests two aspects of the loan request:

- Whether the loan is approved
- Which message the loan request application generates

The Expected Results sheet represents these two tests as columns. For every scenario needed to validate your rules, you specify the results that you expect.

The test suite returns a report comparing your expected results with the actual results of the execution. Each test in a test suite is successful if the expected results match the actual results.
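The comparison that a test suite performs can be sketched in a few lines of Python. This is a hypothetical model rather than how Decision Center implements it: expected and actual results are assumed to be dictionaries keyed by scenario ID, each holding one value per test column, and the scenario IDs and messages below are made-up sample values. A test passes only when the actual value matches the expected one.

```python
from typing import Any, Dict

# Hypothetical shape: scenario ID -> {test column -> value}.
Results = Dict[str, Dict[str, Any]]


def run_comparison(expected: Results, actual: Results) -> Dict[str, Dict[str, bool]]:
    """For each scenario and each expected column, record whether the actual value matches."""
    report: Dict[str, Dict[str, bool]] = {}
    for scenario_id, tests in expected.items():
        observed = actual.get(scenario_id, {})
        report[scenario_id] = {
            # A missing actual value counts as a failed test.
            test: test in observed and observed[test] == value
            for test, value in tests.items()
        }
    return report


def all_passed(report: Dict[str, Dict[str, bool]]) -> bool:
    """True only if every test of every scenario succeeded."""
    return all(ok for tests in report.values() for ok in tests.values())


expected = {
    "Scenario 1": {"approved": True, "message": "Very low risk"},
    "Scenario 2": {"approved": False, "message": "Amount too high"},
}
actual = {
    "Scenario 1": {"approved": True, "message": "Very low risk"},
    "Scenario 2": {"approved": True, "message": ""},
}
report = run_comparison(expected, actual)
```

Here both tests succeed for `Scenario 1`, both fail for `Scenario 2`, and `all_passed(report)` is `False`.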

For more technical tests, you can also include the Expected Execution Details sheet, for example to check the list of rules executed or the duration of the execution.
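A technical check of this kind could look like the following Python sketch. The `ExecutionDetails` record, the `check_execution` function, and the rule names are hypothetical. The sketch also assumes one possible interpretation of the expected values: the expected rules must all have been executed, and the expected duration acts as an upper bound.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExecutionDetails:
    # Hypothetical record of what happened during one rule execution.
    rules_executed: List[str]
    duration_ms: float


def check_execution(
    actual: ExecutionDetails, expected_rules: List[str], max_duration_ms: float
) -> List[str]:
    """Return failure descriptions; an empty list means the technical test passed."""
    failures: List[str] = []
    not_run = [rule for rule in expected_rules if rule not in actual.rules_executed]
    if not_run:
        failures.append(f"rules not executed: {not_run}")
    if actual.duration_ms > max_duration_ms:
        failures.append(f"took {actual.duration_ms} ms, limit is {max_duration_ms} ms")
    return failures


details = ExecutionDetails(["checkCreditScore", "computeRate"], 12.5)
```

With these sample values, `check_execution(details, ["checkCreditScore"], 50)` returns an empty list, while expecting a rule that never ran or a limit of 10 ms produces failure messages.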

Simulations

You use simulations to perform what-if analysis against realistic data to evaluate the potential impact of changes you might want to make to your rules. This data corresponds to your usage scenarios, and often comes from historical databases that contain real customer information.

A simulation returns a report that provides a business-relevant interpretation of the results, based on KPIs that your development team defines. For example, the following figure shows a simulation report indicating that, with this set of rules, 60% of loans are approved:
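A KPI such as the percentage of approved loans is an aggregate over all simulated scenarios. The following Python sketch computes it under the assumption that each result is a dictionary with a Boolean `approved` entry. The function name and the result shape are hypothetical, and the sample data is made up, not taken from the report in the figure.

```python
from typing import Any, Iterable, Mapping


def approval_rate_pct(results: Iterable[Mapping[str, Any]]) -> float:
    """KPI: percentage of simulated scenarios whose 'approved' output is True."""
    outcomes = [bool(result["approved"]) for result in results]
    if not outcomes:
        raise ValueError("simulation produced no results")
    return 100.0 * sum(outcomes) / len(outcomes)


# Made-up sample: five scenarios, three of them approved.
sample = [{"approved": True}] * 3 + [{"approved": False}] * 2
rate = approval_rate_pct(sample)
```

For this sample, `rate` is `60.0`. In practice a simulation runs over a much larger set of scenarios, which makes such KPIs more meaningful.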

You can also send the generated information to Excel or to other business intelligence tools, where you can compile and analyze it.
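One common hand-off format for spreadsheets and business intelligence tools is CSV. The following Python sketch uses the standard `csv` module to turn result rows into CSV text. It does not describe Decision Center's own export mechanism: the `to_csv` helper and the column names are assumptions made for this illustration.

```python
import csv
import io
from typing import Any, Iterable, List, Mapping


def to_csv(rows: Iterable[Mapping[str, Any]], columns: List[str]) -> str:
    """Serialize result rows as CSV text, with a header row of column names."""
    buffer = io.StringIO()
    # lineterminator="\n" keeps the output identical across platforms.
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


text = to_csv([{"scenario": "Scenario 1", "approved": True}], ["scenario", "approved"])
```

Here `text` is `"scenario,approved\nScenario 1,True\n"`, which you could save as a `.csv` file and open in Excel.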