White Papers

Abstract

This document explains how to increase the available network bandwidth from 10GBit/s to 20GBit/s by using a combination of link aggregation on the accelerator hosts and per-connection multipath on all configured z/OS LPARs.

Content

Introduction

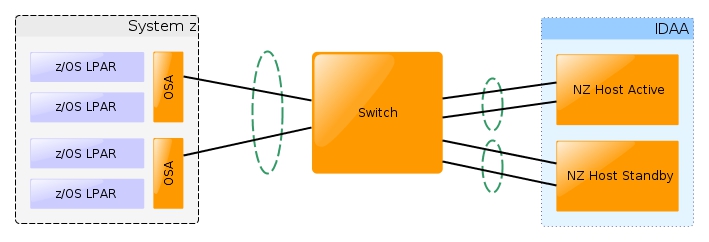

By default, IBM DB2 Analytics Accelerator uses an active-backup network configuration, as described in the network configurations document. In this configuration, only one of the two links between the switch and the accelerator appliance is used to transmit data, and only one of the OSA cards in the Central Processing Complex (CPC) is used to send data. The available network bandwidth can be increased from 10GBit/s to 20GBit/s by using both OSA cards in a CPC through multipath routing and by implementing an active-active network connection between the switch and IBM DB2 Analytics Accelerator using the Link Aggregation Control Protocol (LACP).

Network Setup

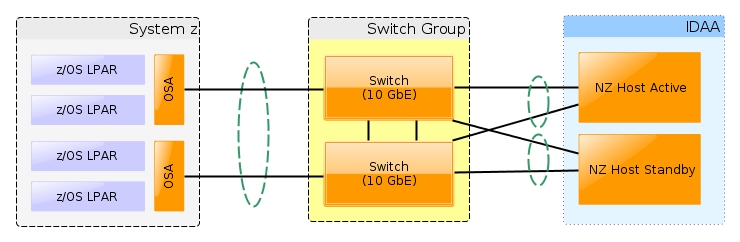

There are two recommended setups - a basic and a high-availability setup. Depending on the high-availability requirements of the IBM DB2 Analytics Accelerator installation, either one of the setups can be implemented.

With the basic setup, the system can tolerate an outage of one of the OSA cards, a failover from the active to the standby host, and an outage of one of the two links from each component to the switch. In such a case, the system falls back to the regular 10GBit/s bandwidth.

In contrast to the basic setup, the high-availability setup can also tolerate the outage of one of the switches, thus offering greater availability. It is the recommended setup for production environments. However, the switches must provide the following features to support an active-active network environment:

- LACP support: The first requirement for the switch is general LACP support as well as the possibility to customize the LACP link selection policy, given that the standard policy is insufficient. The minimum requirement for a link selection policy is that it has to take the source and destination TCP port into account. However, policies that take additional information into account will work too.

- Virtual Switch Support: The second requirement is support for creating a virtual switch that consists of both physical switches, so that both switches look like a single switch to the clients connected to them. There are multiple vendor-specific implementations of this feature: for Juniper switches it is called Virtual Chassis Port Link Aggregation (VCP), for BLADE-OS switches it is called Virtual Link Aggregation Groups (VLAG), and for Cisco switches it is called Virtual Port Channel (vPC). This feature is required because the Link Aggregation Control Protocol mandates that both cables of a client be connected to the same switch. Without it, a high-availability setup cannot be implemented.
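The following Python sketch illustrates why the link selection policy has to take the TCP ports into account. It is only a model: real switches use vendor-specific hash functions, and the CRC32 hash, the IP addresses, and the port numbers used here are hypothetical. It also assumes that the insufficient policy hashes on addresses only. Under that assumption, all parallel connections between the same two endpoints produce the same hash input and therefore end up on a single LAG member.

```python
import zlib

def select_link(fields, num_links=2):
    """Pick a LAG member by hashing header fields (illustrative hash, not a vendor algorithm)."""
    key = "|".join(str(f) for f in fields).encode()
    return zlib.crc32(key) % num_links

# Hypothetical endpoints: one z/OS address, one accelerator address,
# and 20 parallel TCP connections that differ only in their source port.
SRC_IP, DST_IP, DST_PORT = "10.0.0.1", "10.0.0.2", 1400
connections = [(SRC_IP, DST_IP, 50000 + i, DST_PORT) for i in range(20)]

def distribution(use_ports):
    """Count how many connections are mapped to each of the two LAG members."""
    counts = [0, 0]
    for src_ip, dst_ip, src_port, dst_port in connections:
        if use_ports:
            fields = (src_ip, dst_ip, src_port, dst_port)
        else:
            fields = (src_ip, dst_ip)
        counts[select_link(fields)] += 1
    return counts

ip_only = distribution(use_ports=False)    # every connection hashes to the same member
with_ports = distribution(use_ports=True)  # connections can spread across both members

print("IP addresses only:       ", ip_only)
print("IP addresses + TCP ports:", with_ports)
```

With addresses only, one member always carries all 20 connections. With the ports included, the split depends on the hash function and is not guaranteed to be exactly even.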

Configuration Changes

To implement an active-active topology, both the network topology and the configuration have to be changed. Changes have to be made to the TCPIP configuration on System z, to the network configuration on Linux, and to the switch(es) between System z and the IBM DB2 Analytics Accelerator appliance.

System z configuration

Multipath has to be enabled in the TCPIP profile. This can either be done globally or only for a given route. To do so globally, the following statement can be used:

- IPCONFIG MULTIPATH PERCONNECTION

For more information, see the description of the IPCONFIG statement in the z/OS Communications Server IP Configuration Reference. Alternatively, multipath can be enabled for certain routes only, via a policy-based route table.

IBM DB2 Analytics Accelerator configuration

On the IBM DB2 Analytics Accelerator side, the network configuration has to be changed to ensure that an active-active connection is used to exchange data between the accelerator and System z and not an active-backup connection. These changes are made by IBM Support.

Note that the changes on the accelerator side and on the switch side have to be made at the same time; otherwise it will no longer be possible to communicate with the accelerator. Activate the active-active configuration on one host at a time, beginning with the standby host and continuing with the active host. This way, there is always a working network connection between System z and the accelerator.

Switch configuration

For LACP to work, a Link Aggregation Group (LAG) has to be defined on the switch for each of the two hosts of the accelerator appliance. To define a LAG on the switch, two or more ports have to be defined as group members. Both cables of accelerator host 1 have to be connected to the member ports of LAG 1, and both cables of accelerator host 2 have to be connected to the member ports of LAG 2. Both LAGs have to be configured with MTU size 8992. The members of a LAG must reside on the same switch unless both switches support VLAG/vPC/VCP. Such support is a requirement in a high-availability setup.

Some switches implement a feature where ports in the same module/chassis are preferred during LACP link selection (called "link-local" for Juniper switches), which adds a bias to the selection policy. While this is a helpful feature in general, the resulting behavior is not desired in this use case. Thus, precautions must be taken when configuring the switch to avoid this behavior. Ways to do so are to:

- Put all System z links and all accelerator links into the same chassis/module

- Put all System z links in one chassis/module and all accelerator links in another chassis/module

- Put all System z links in one chassis/module and both links of one accelerator host in the same chassis/module and the links of the other accelerator host in another chassis/module

Configuration Verification

To verify that the configuration changes have taken effect and work as intended, run the ACCEL_TEST_CONNECTION stored procedure. During verification, no other operations should be running on the accelerator, so that the measured results are not influenced by other factors.

Preparation

Before running the ACCEL_TEST_CONNECTION stored procedure, make a note of the number of outbound packets for both OSA cards, as well as the number of bytes sent by both members of the LAGs. The number of outbound packets for both OSA cards can be determined using the following command:

D TCPIP,,N,DEVL

How to determine the number of bytes sent by both members of the LAGs depends on the switch manufacturer and therefore cannot be described in a general way here.

Test run

As a next step, run the ACCEL_TEST_CONNECTION stored procedure with the following input XML (replace the accelerator name TESTACC1 with the real accelerator name):

<aqttables:diagnosticCommand xmlns:aqttables="

<networkSpeed accelerator="TESTACC1" totalNumberBytes="1000000000" dataBatchSize="320000" parallelConnections="20" seed="12345"/>

</aqttables:diagnosticCommand>

This transfers a total of 20GB of data from System z to the accelerator using 20 individual TCP connections, each connection transferring 1GB. In a working active-active setup, each of the two OSA cards transfers 10GB of data, and each LAG member transfers approximately half of the total data. Because the LACP link selection policy is based on a hashing algorithm, the data might not be distributed evenly across the LAG members. However, the distribution should not be overly uneven.
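The expected volumes can be worked out from the input XML as in the following Python sketch. It follows the reading given above, in which each of the parallel connections transfers totalNumberBytes bytes. The transfer times are idealized lower bounds at the nominal line rate; they ignore protocol overhead and any other bottleneck, so measured times will be longer.

```python
# Values taken from the input XML above; per the text, each connection
# transfers totalNumberBytes bytes.
BYTES_PER_CONNECTION = 1_000_000_000
PARALLEL_CONNECTIONS = 20

def ideal_seconds(num_bytes, gbit_per_s):
    """Lower bound on the transfer time at the given line rate, ignoring all overhead."""
    return num_bytes * 8 / (gbit_per_s * 1_000_000_000)

total_bytes = BYTES_PER_CONNECTION * PARALLEL_CONNECTIONS
per_osa_bytes = total_bytes // 2  # even split across the two OSA cards

print("total bytes:           ", total_bytes)
print("expected per OSA card: ", per_osa_bytes)
print("ideal time at 10GBit/s:", ideal_seconds(total_bytes, 10), "s")
print("ideal time at 20GBit/s:", ideal_seconds(total_bytes, 20), "s")
```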

Verification

To verify that the setup works as expected, again make a note of the number of outbound packets for both OSA cards and of the number of bytes transferred by both LAG members, as you did in the preparation phase. Compute the difference between the values collected before and after the test run, and verify that the data was distributed evenly across both OSA cards and that the distribution across both LAG members is not overly uneven.
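The comparison of the counter values can be sketched in Python as follows. The function names, the counter readings, and the tolerance values are hypothetical; this document does not define a threshold for "overly uneven", so the tolerance must be chosen to suit the installation. The calculation is the same whether the counters are packets (OSA cards) or bytes (LAG members).

```python
def share_per_member(before, after):
    """Return each member's fraction of the traffic sent between two counter readings."""
    deltas = [a - b for b, a in zip(before, after)]
    total = sum(deltas)
    if total <= 0:
        raise ValueError("counters did not increase between the two readings")
    return [d / total for d in deltas]

def is_balanced(shares, tolerance):
    """True if every member's share is within `tolerance` of a perfectly even split."""
    even = 1 / len(shares)
    return all(abs(s - even) <= tolerance for s in shares)

# Made-up readings for illustration only: [member 1, member 2]
osa_before, osa_after = [1_000, 5_000], [2_001_000, 2_005_000]
lag_before, lag_after = [0, 0], [11_000_000_000, 9_000_000_000]

osa_shares = share_per_member(osa_before, osa_after)
lag_shares = share_per_member(lag_before, lag_after)

# Assumed tolerances: tight for the OSA cards, looser for the hash-based LAG split.
print("OSA cards balanced:  ", is_balanced(osa_shares, tolerance=0.05))
print("LAG members balanced:", is_balanced(lag_shares, tolerance=0.20))
```

If one member's share is close to zero, traffic is most likely still flowing over a single link, which points to multipath or the LACP link selection policy not being active.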

Product Synonym

idaa

Document Information

Modified date:

08 August 2018

UID

swg27043945